Navigating the Kernel: A Deep Dive into Memory-Mapped Files and Their Significance

Operating systems mediate every interaction between user applications and the kernel. One such mechanism, the memory-mapped file, gives a process a powerful and efficient way to access and manipulate file data: the kernel maps the file's contents into the process's own virtual address space, so the data can be read and written like ordinary memory. This article covers how memory-mapped files work, what benefits they offer, and where they are used.

Understanding the Concept: Memory-Mapped Files

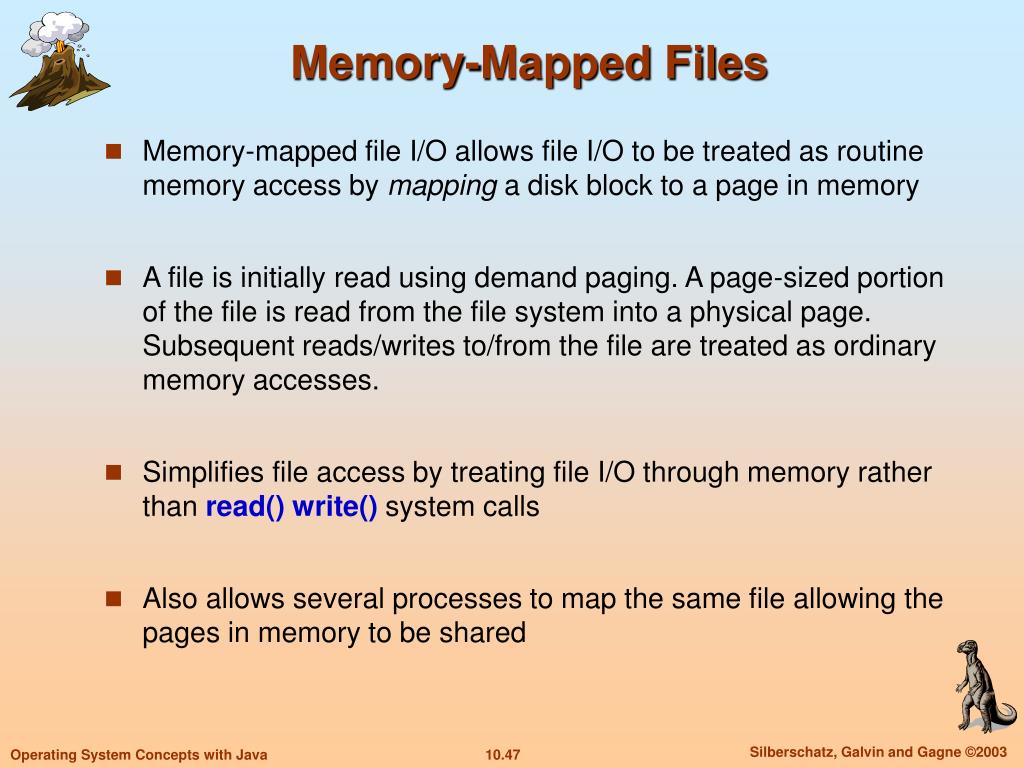

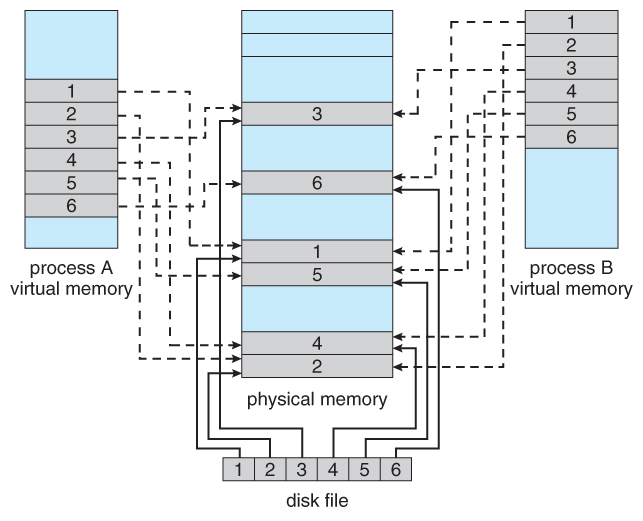

At its core, a memory-mapped file establishes a direct connection between a file stored on disk and a region of virtual memory within a process’s address space. This connection eliminates the need for explicit read and write operations, allowing processes to interact with the file’s content as if it were a regular memory block.

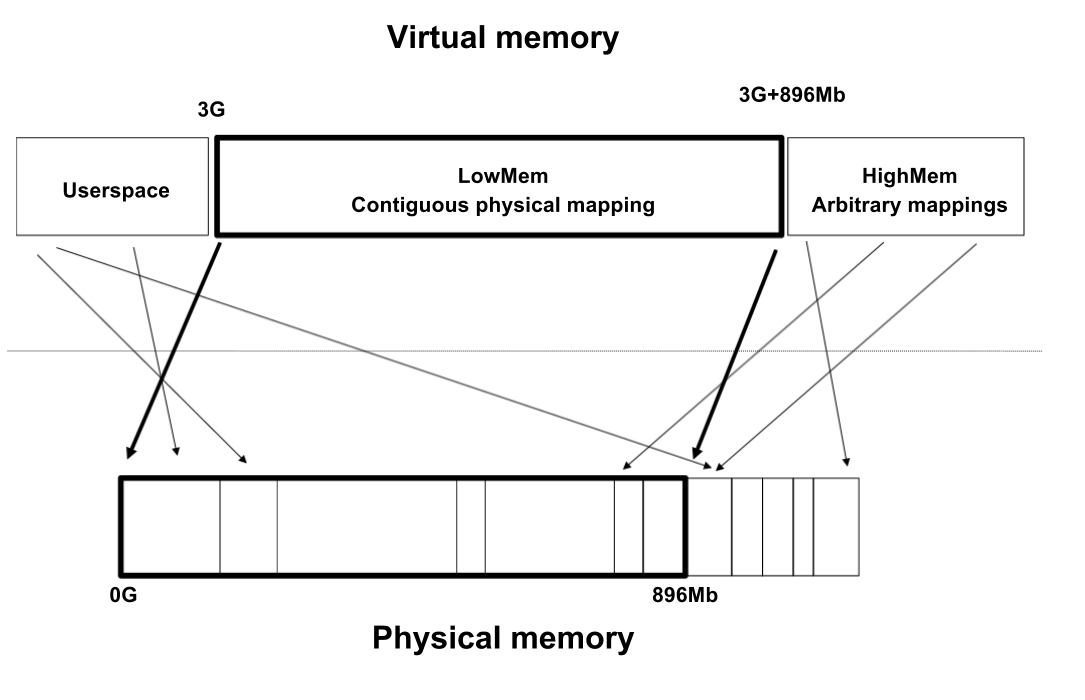

This concept relies on the operating system’s memory management capabilities, specifically the virtual memory system. Virtual memory provides an abstraction layer, allowing processes to access memory beyond the physical limitations of the system. When a process maps a file into its virtual memory, the operating system allocates a virtual address range for this file. However, the actual data does not reside in physical memory unless explicitly accessed.

Instead, the data remains on disk until the process touches a mapped address. The first access to a page that is not yet resident triggers a page fault, and the operating system loads that page from the file (demand paging). This approach ensures efficient memory usage, as only the actively used portions of the file are loaded into physical memory.
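The on-demand behavior described above can be sketched with Python's standard `mmap` module. This is a minimal illustration rather than a definitive implementation: the helper name `read_window` and the demo file contents are assumptions made for the example.

```python
import mmap
import os
import tempfile


def read_window(path: str, start: int, length: int) -> bytes:
    """Return `length` bytes starting at `start` via a read-only mapping.

    `read_window` is a hypothetical helper written for this example.
    """
    with open(path, "rb") as f:
        # A length of 0 maps the whole file. Creating the mapping reserves
        # address space; it does not read the whole file into memory.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            # Slicing copies out only the requested bytes, so only the
            # pages covering this range need to be resident.
            return mm[start:start + length]


# Demo on a throwaway file (the contents are made up for illustration).
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"A" * 8192 + b"needle" + b"B" * 8192)
    demo_path = tmp.name

window = read_window(demo_path, 8192, 6)
os.remove(demo_path)
```

The caller never issues a seek or read call for the window; the slice on the mapping is an ordinary memory access.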

The Mechanics of Memory-Mapped Files: A Detailed Look

The process of creating a memory-mapped file involves several key steps:

- File Open: The process first opens the desired file using standard system calls. This step establishes a file descriptor, a unique identifier for the file within the process’s context.

- Mapping Request: The process then issues a system call to map the file into its virtual memory. This call specifies the file descriptor, the offset and length of the region to be mapped, the desired access permissions (read, write, or both), and whether changes are shared with the file or kept private to the process.

- Virtual Memory Allocation: The operating system allocates a virtual address range within the process’s address space to accommodate the mapped file. This range starts on a page boundary, because pages are the unit in which virtual memory is managed.

- Page Table Updates: The operating system records the association between the allocated virtual addresses and the corresponding file pages. On most systems the page table entries themselves are filled in lazily, when a page fault first brings a page into memory.

- Data Access: The process can now treat the mapped file as a regular memory block, accessing its content using standard memory access instructions. With a shared mapping, modifications are made to the in-memory pages and written back to the underlying file by the operating system at a later point; a process that needs the data on disk at a specific moment must request a flush explicitly (for example with msync on POSIX systems). With a private (copy-on-write) mapping, modifications are never written back to the file.
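The steps above can be sketched end to end in Python. This is an illustrative sketch under stated assumptions: `patch_bytes` is a hypothetical helper, the demo file is made up, and the call to `flush()` asks the operating system to write modified pages back to the file rather than waiting for it to do so on its own schedule.

```python
import mmap
import os
import tempfile


def patch_bytes(path: str, offset: int, data: bytes) -> None:
    """Overwrite bytes in place through a shared, writable mapping.

    `patch_bytes` is a hypothetical helper; the range must lie inside
    the existing file, because a mapping cannot grow the file.
    """
    # File open: obtain a descriptor with read and write access.
    with open(path, "r+b") as f:
        # Mapping request: map the whole file (length 0) for writing.
        # The OS picks the address range and sets up the page tables.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as mm:
            # Data access: a slice assignment is a plain memory write.
            mm[offset:offset + len(data)] = data
            # Ask the OS to write the modified pages back to the file.
            mm.flush()


# Demo on a throwaway file (contents are made up for illustration).
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello world")
    patch_path = tmp.name

patch_bytes(patch_path, 6, b"WORLD")
with open(patch_path, "rb") as f:
    patched = f.read()
os.remove(patch_path)
```

Leaving the `with` block unmaps the region and closes the descriptor, which mirrors the cleanup a C program would do with `munmap` and `close`.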

Benefits of Memory-Mapped Files: Streamlining Data Access and Manipulation

Memory-mapped files offer numerous advantages over traditional file I/O methods, making them a valuable tool for various applications:

- Simplified Data Access: Once the mapping exists, the process reads and modifies file data with ordinary memory operations instead of issuing a read or write call for each access. Code that needs random access to a file no longer has to manage buffers and seek positions, which reduces complexity.

- Efficient Memory Usage: Only the actively used portions of the file are loaded into physical memory, minimizing memory overhead. This is particularly beneficial for large files where only a small portion is required at any given time.

- Shared Memory: Memory-mapped files provide a mechanism for multiple processes to share data efficiently. By mapping the same file into the virtual address spaces of multiple processes, these processes can access and modify the shared data directly, enabling inter-process communication without copying data through pipes, sockets, or message queues. The processes still need to synchronize their accesses.

- Improved Performance: A mapping avoids the system call per access and the extra copy between a kernel buffer and a user buffer that read and write involve. This can significantly improve performance, especially for applications that access the same file frequently or at random offsets.
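The shared-memory benefit can be illustrated with two independent mappings of the same file. To keep the sketch self-contained, both mappings live in one process here and stand in for two cooperating processes; the names `writer` and `reader` and the scratch file are assumptions made for the example.

```python
import mmap
import os
import tempfile

# Create a one-page scratch file to act as the shared region.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\x00" * mmap.PAGESIZE)
    shared_path = tmp.name

# Two separate opens and two separate mappings of the same file.
# In a real setup each pair would belong to a different process.
writer_file = open(shared_path, "r+b")
reader_file = open(shared_path, "rb")
writer = mmap.mmap(writer_file.fileno(), 0, access=mmap.ACCESS_WRITE)
reader = mmap.mmap(reader_file.fileno(), 0, access=mmap.ACCESS_READ)

# A write through one shared mapping is visible through the other,
# without any read or write call on the file in between.
writer[0:5] = b"hello"
seen = reader[0:5]

# Unmap and close everything before deleting the scratch file.
reader.close()
writer.close()
reader_file.close()
writer_file.close()
os.remove(shared_path)
```

This sketch has only one writer and no concurrent access, so it needs no locking; real multi-process use would add synchronization around the shared region.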

Applications of Memory-Mapped Files: Unveiling the Possibilities

Memory-mapped files find widespread use in various scenarios, showcasing their versatility and efficiency:

- Database Systems: Memory-mapped files are often employed in database systems to manage large data files efficiently. By mapping the data files into memory, a database can access and manipulate records directly, improving query performance and reducing I/O overhead.

- Image Processing: Image processing applications often rely on memory-mapped files to handle large image files. By mapping these files into memory, algorithms can access and manipulate pixel data in place, without first loading the whole image.

- Text Editors and IDEs: Some text editors and integrated development environments (IDEs) map the file being edited so they can open large files quickly and display any part of the content without reading the whole file first.

- Scientific Computing: Scientific computing applications often process large datasets, where memory-mapped files can be invaluable. By mapping a dataset into memory, an algorithm can index into it like an in-memory array and touch only the parts it needs.
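As a small illustration of the dataset use case, the sketch below treats a mapped binary file as an array of native-endian doubles using only the standard library. The helper `sum_doubles` and the four sample values are assumptions made for the example; numerical libraries offer richer array views over mapped files.

```python
import mmap
import os
import struct
import tempfile


def sum_doubles(path: str) -> float:
    """Sum a file of packed native-endian doubles through a mapping.

    `sum_doubles` is a hypothetical helper; the file size must be a
    multiple of 8 bytes for the cast below to succeed.
    """
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            view = memoryview(mm)
            # Reinterpret the raw bytes as C doubles without copying.
            doubles = view.cast("d")
            try:
                return sum(doubles)
            finally:
                # Views must be released before the mapping can close.
                doubles.release()
                view.release()


# Demo dataset: four doubles written in native byte order.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(struct.pack("4d", 1.0, 2.0, 3.0, 4.5))
    data_path = tmp.name

total = sum_doubles(data_path)
os.remove(data_path)
```

The cast gives element-wise access to the file without an intermediate buffer, which is the property that makes mappings attractive for datasets too large to load in full.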

Understanding the Limitations: Considerations for Optimal Usage

While memory-mapped files offer numerous benefits, they also have limitations that developers should consider:

- Memory Overhead: Although only touched pages occupy physical memory, every mapping consumes virtual address space and page table entries. This overhead can become significant for very large files, particularly on 32-bit systems where address space is scarce, or on systems with limited memory resources.

- Concurrency Issues: Multiple processes accessing the same memory-mapped file can run into concurrency issues if proper synchronization mechanisms are not in place. Modifying shared data concurrently can result in data corruption or inconsistencies.

- File System Limitations: The performance and behavior of memory-mapped files depend on the underlying file system. Some file systems, network file systems in particular, may not be optimized for memory mapping, potentially leading to performance bottlenecks.

- Security Considerations: A mapping exposes file data directly in the process’s address space, and a shared mapping makes one process’s writes visible to every other process that maps the file. File permissions and mapping protections (read-only versus read-write) should be set so that unauthorized processes cannot read or modify sensitive data.

FAQs: Addressing Common Questions about Memory-Mapped Files

Q: What is the difference between memory-mapped files and traditional file I/O?

A: Traditional file I/O involves explicit read and write operations, where data is transferred between the file and the process’s memory space. Memory-mapped files, on the other hand, establish a direct connection between the file and the process’s virtual memory, allowing for direct access and manipulation of file data as if it were a regular memory block.

Q: Are memory-mapped files suitable for all applications?

A: Memory-mapped files are particularly beneficial for applications that involve frequent file access, large file sizes, or shared data manipulation. However, they may not be suitable for applications with strict memory constraints or those that require high levels of security.

Q: How do I ensure data consistency when multiple processes access the same memory-mapped file?

A: To ensure data consistency, appropriate synchronization mechanisms should be implemented when multiple processes access the same memory-mapped file. These mechanisms can include mutexes, semaphores, or other synchronization primitives, ensuring that only one process can access and modify the shared data at a time.
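One way to apply this is an advisory file lock held around each read-modify-write of the mapped data. The sketch below assumes a POSIX system, because it relies on the `fcntl` module; the helper `locked_increment` and the 8-byte counter layout are hypothetical choices made for the example.

```python
import fcntl  # POSIX only; this sketch assumes a Unix-like system
import mmap
import os
import struct
import tempfile

# Assumed layout: the file holds one little-endian unsigned 64-bit counter.
COUNTER = struct.Struct("<Q")


def locked_increment(path: str) -> int:
    """Increment the counter in the mapped file under an exclusive lock.

    `locked_increment` is a hypothetical helper. The lock is advisory:
    it only protects against other code that also takes the lock.
    """
    with open(path, "r+b") as f:
        # Block until no other holder has the lock on this file.
        fcntl.flock(f, fcntl.LOCK_EX)
        try:
            with mmap.mmap(f.fileno(), COUNTER.size) as mm:
                (value,) = COUNTER.unpack_from(mm, 0)
                COUNTER.pack_into(mm, 0, value + 1)
                mm.flush()
                return value + 1
        finally:
            fcntl.flock(f, fcntl.LOCK_UN)


# Demo: start the counter at zero and increment it three times.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(COUNTER.pack(0))
    counter_path = tmp.name

results = [locked_increment(counter_path) for _ in range(3)]
os.remove(counter_path)
```

The demo calls the helper sequentially; the same function could be called from several processes, each of which would wait at `flock` until the current holder releases the lock.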

Q: What are the performance implications of using memory-mapped files?

A: Memory-mapped files can significantly improve performance for applications that access the same file frequently or at random offsets, because they avoid a system call per access and an extra copy of the data. The gain is not guaranteed, however: each first touch of a page costs a page fault, and results depend on the access pattern, the underlying file system, and the memory available on the system. Measuring with a realistic workload is the reliable way to decide.

Tips for Effective Use of Memory-Mapped Files: Maximizing Efficiency and Security

- Choose the Right File System: Consider the file system’s capabilities and performance characteristics when using memory-mapped files. A file system that handles mappings well can significantly improve performance, so measure on the file system the application will actually run on.

- Manage Memory Overhead: Be mindful of the memory overhead associated with memory-mapped files, especially for large files or systems with limited memory resources. Map only the region that is needed rather than the whole file, and unmap regions that are no longer in use.

- Implement Synchronization Mechanisms: When multiple processes access the same memory-mapped file, implement appropriate synchronization mechanisms to prevent data corruption or inconsistencies. Mutexes, semaphores, or other synchronization primitives can ensure that only one process modifies shared data at a time.

- Prioritize Security: Address security concerns by implementing appropriate access controls and permissions for memory-mapped files. Ensure that only authorized processes can access and modify sensitive data.
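Mapping only part of a file, as suggested in the tips above, can be sketched as follows. The mapping offset must be a multiple of the platform's allocation granularity, so the hypothetical helper `read_region` rounds the start down and skips the extra leading bytes; the demo file layout is made up for illustration.

```python
import mmap
import os
import tempfile


def read_region(path: str, start: int, length: int) -> bytes:
    """Map only the part of the file that covers [start, start + length).

    `read_region` is a hypothetical helper; the requested range must
    lie inside the file.
    """
    gran = mmap.ALLOCATIONGRANULARITY
    # Round the start down to the nearest allowed mapping offset.
    aligned = (start // gran) * gran
    delta = start - aligned
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), delta + length,
                       access=mmap.ACCESS_READ, offset=aligned) as mm:
            # Skip the alignment padding at the front of the mapping.
            return mm[delta:delta + length]


# Demo: a marker placed a little past the second granularity boundary.
gran = mmap.ALLOCATIONGRANULARITY
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"x" * (2 * gran + 10) + b"MARK" + b"y" * 100)
    region_path = tmp.name

marker = read_region(region_path, 2 * gran + 10, 4)
os.remove(region_path)
```

Only a few bytes past the aligned offset are mapped here, so the address space used stays small no matter how large the file is.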

Conclusion: The Power of Memory-Mapped Files in Modern Operating Systems

Memory-mapped files provide a powerful and efficient mechanism for processes to work with file data through their own virtual address space. By establishing a direct connection between files and virtual memory, they streamline data access, can improve performance, and enable efficient sharing of data between processes. Understanding both how they work and where their limitations lie allows developers to use them effectively while keeping data consistent and secure. Memory-mapped files remain a core facility of modern operating systems and a practical tool for applications that handle large or shared data.
