Navigating the Memory Landscape: A Comprehensive Exploration of Memory-Mapped Files

Introduction

Efficient data management matters in applications ranging from data analysis and scientific simulations to multimedia processing and database systems, all of which need to access and manipulate large datasets. Traditional file I/O, based on explicit read and write calls, is reliable, but it can become cumbersome and slow when a program works with large volumes of data. Memory-mapped files offer an alternative: the operating system maps a file into the application’s address space, so the program can work with the file’s contents as if they were ordinary memory.

Understanding the Essence of Memory-Mapped Files

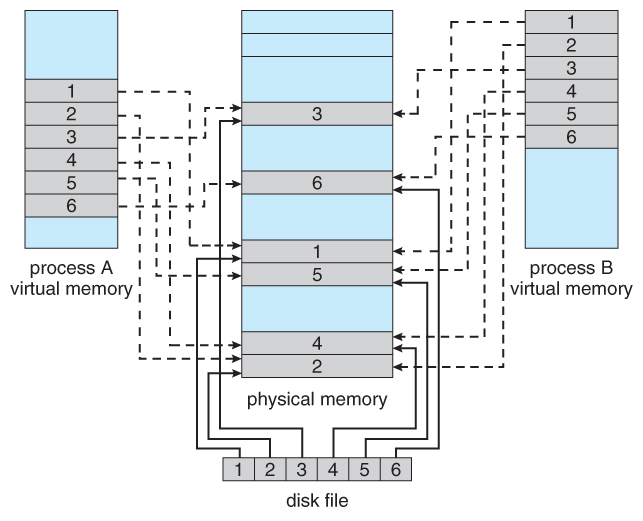

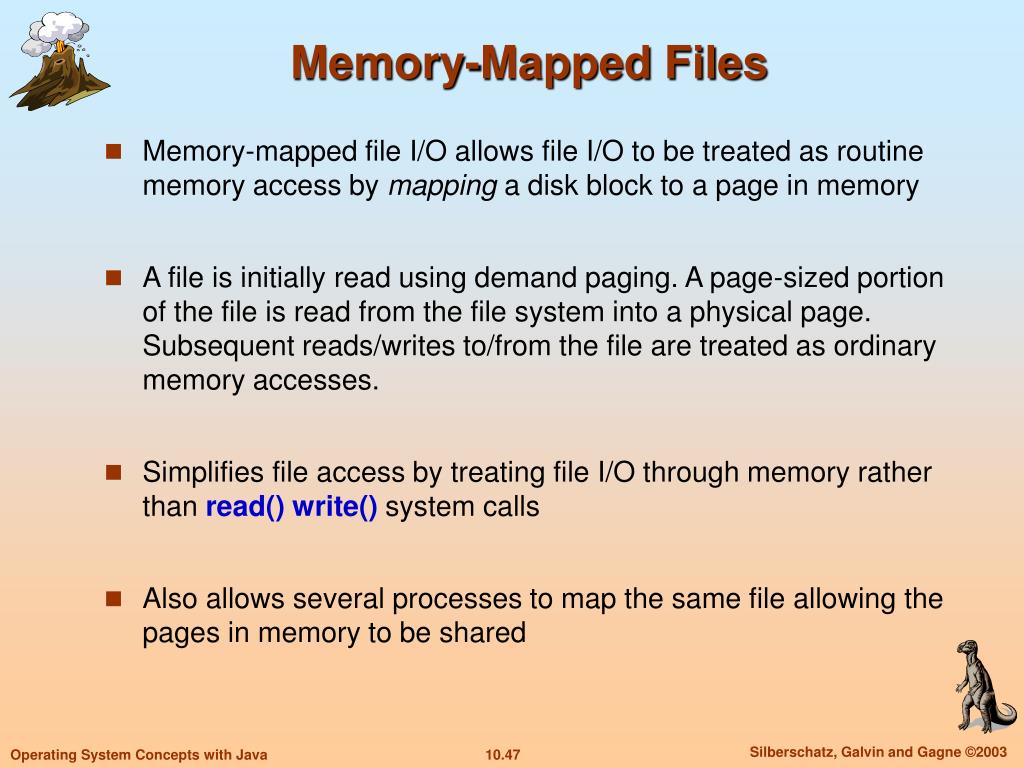

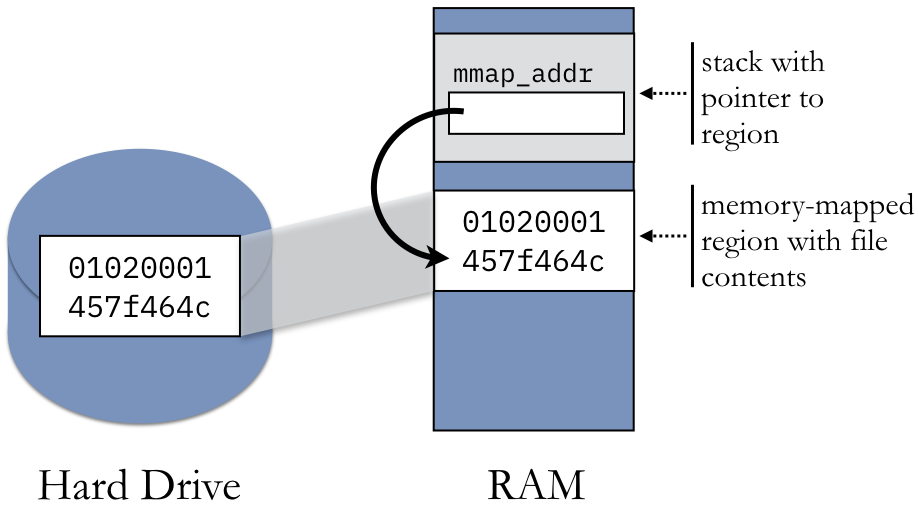

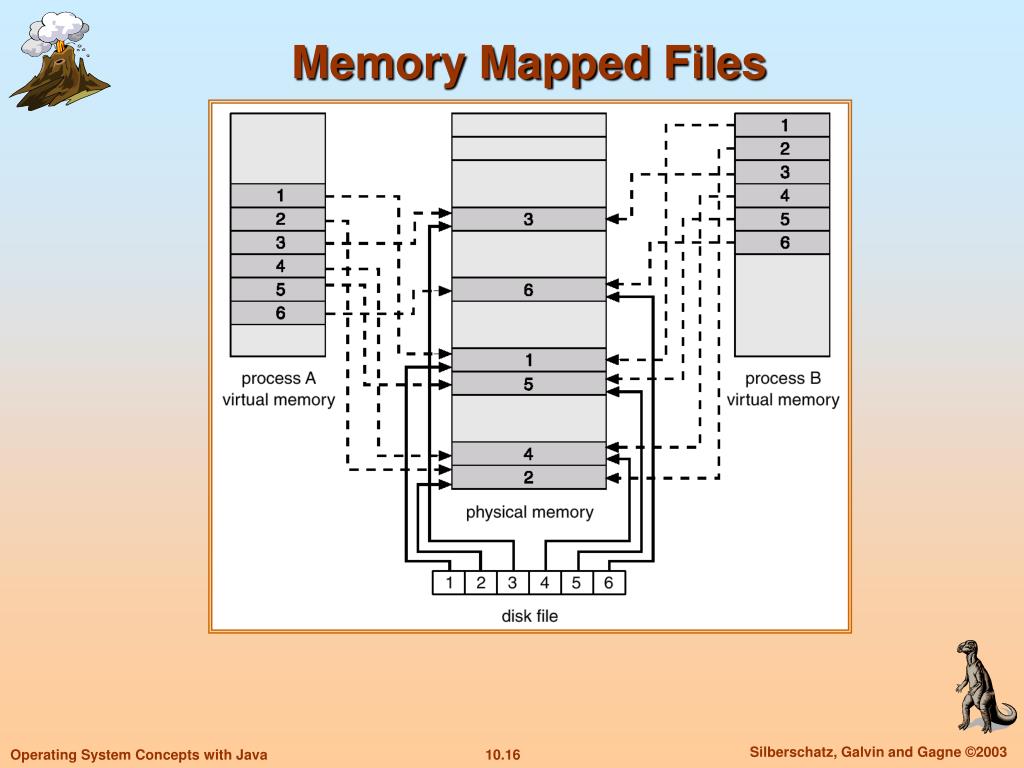

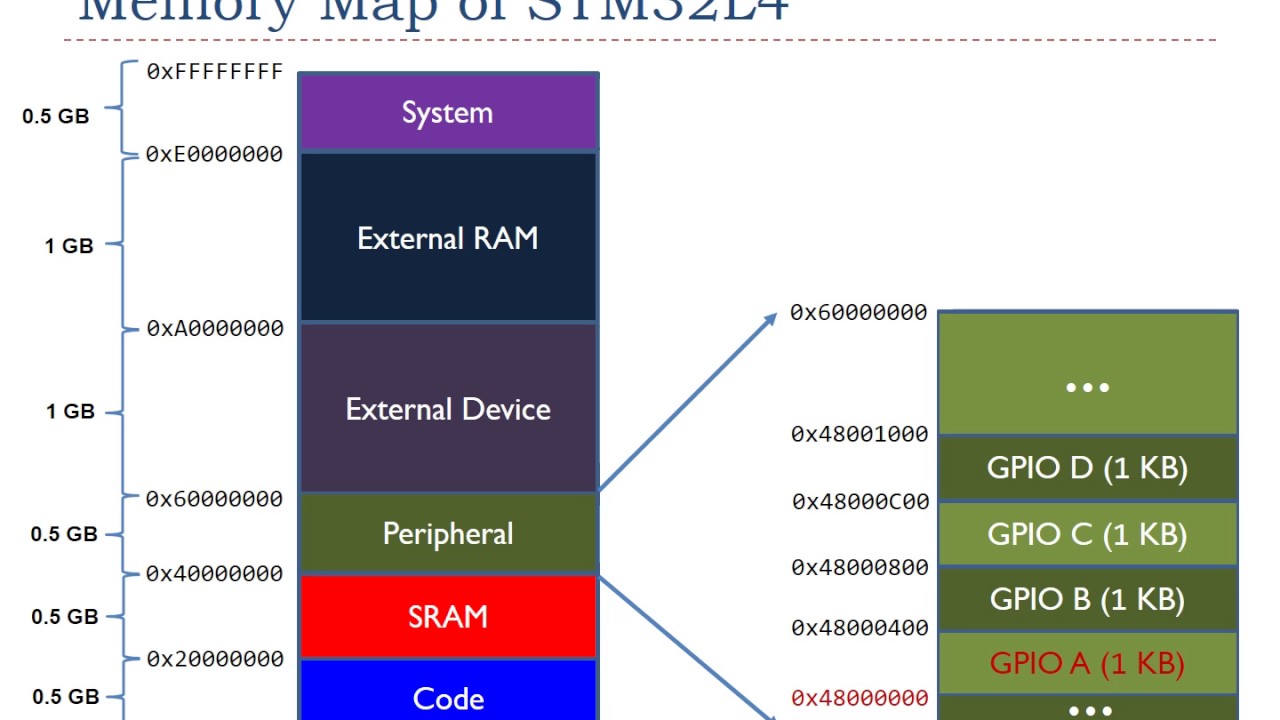

Memory-mapped files, often called mmap files after the POSIX call that creates them, bridge the gap between files stored on disk and the application’s memory. Instead of reading data from a file into a buffer in chunks, memory mapping establishes a direct link between the file’s contents and a region of the application’s address space. The program no longer issues explicit read and write calls; it treats the file as if it were part of its own memory.

The core principle is a virtual memory mapping. The operating system reserves a range of the application’s virtual address space and associates it with the file’s contents. Pages are loaded from disk on demand, the first time the program touches them. With a shared, writable mapping, changes made through the mapped region are written back to the underlying file by the operating system, although the timing is up to the OS unless the program flushes the mapping explicitly. The program therefore does not copy data between the file and its own buffers by hand, which simplifies file handling and can improve performance.
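To make the idea concrete, here is a minimal sketch using Python’s standard `mmap` module. The helper name `read_through_mapping` and the temporary sample file are assumptions made for illustration only; they are not part of any library.

```python
import mmap
import os
import tempfile


def read_through_mapping(path: str, start: int, length: int) -> bytes:
    """Return `length` bytes at offset `start`, read via a memory mapping."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file; ACCESS_READ makes the mapping read-only.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            # Slicing the mapping looks like slicing a bytes object; the OS
            # loads the underlying pages from the file on first access.
            return mapped[start:start + length]


# Hypothetical sample file, created only for this demonstration.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello, memory-mapped world")
    sample_path = tmp.name

greeting = read_through_mapping(sample_path, 7, 13)
print(greeting)  # b'memory-mapped'
os.remove(sample_path)
```

Note that there is no explicit `read()` call on the file: the slice on the mapping is the only data access.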

Unraveling the Benefits of Memory-Mapped Files

Memory-mapped files bring several advantages that make them a compelling choice for many applications:

- Enhanced Performance: Memory-mapped files avoid much of the overhead of traditional file I/O. There is no system call per read or write, and data does not have to be copied from the operating system’s cache into a separate application buffer. Data is still read from disk, but only when a page is first touched. The gain is most noticeable for large files that are accessed repeatedly or at scattered positions.

- Simplified Data Management: Treating a file as part of the application’s memory simplifies data handling. Programmers can access and manipulate file data as if it were regular memory, without managing buffers and file positions. This can reduce code complexity and improve maintainability.

- Efficient Data Sharing: Several processes can map the same file, and with a shared mapping they see the same underlying memory pages. This allows data to be shared without copying, although the processes must still coordinate concurrent modifications themselves.

- Reduced Memory Footprint: A program does not need to load the entire file into memory. Only the pages that are actually accessed occupy physical memory, and the operating system can evict them again under memory pressure, which keeps consumption low even for very large files.
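The data-sharing point can be sketched as follows. In a real application the two mappings would usually live in different processes; to keep the example self-contained, both are created in one process here, which is an assumption of this sketch. The file name and variable names are illustrative.

```python
import mmap
import os
import tempfile

# Hypothetical 16-byte file that both mappings will share.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\x00" * 16)
    shared_path = tmp.name

with open(shared_path, "r+b") as f1, open(shared_path, "rb") as f2:
    # ACCESS_WRITE creates a shared, writable mapping of the whole file.
    writer = mmap.mmap(f1.fileno(), 0, access=mmap.ACCESS_WRITE)
    # A second, read-only mapping of the same file.
    reader = mmap.mmap(f2.fileno(), 0, access=mmap.ACCESS_READ)

    writer[0:5] = b"hello"       # modify through one mapping
    seen_by_reader = reader[0:5]  # observe through the other

    writer.close()
    reader.close()

os.remove(shared_path)
print(seen_by_reader)
```

Nothing in this sketch synchronizes the two sides; it only shows that both mappings refer to the same file contents.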

Exploring the Practical Applications of Memory-Mapped Files

These advantages have made memory-mapped files a common tool in a wide range of applications:

- Data Analysis and Scientific Computing: Memory-mapped files are widely used in applications that process large datasets. The program can address the data directly without first loading the whole file, which can speed up processing and analysis considerably.

- Image and Video Processing: Memory-mapped files give image and video processing applications efficient access to large media files, so that frames or regions can be read and modified in place.

- Database Systems: Some database engines use memory-mapped files for fast access to large data files. Mapping lets the engine work on the data in place and leaves caching to the operating system.

- Text Editors and Word Processors: Memory-mapped files are sometimes employed in text editors and word processors to open large documents. The editor can access any part of the document without first reading the whole file into a buffer, which keeps editing responsive.

- Multimedia Applications: Audio and video players can use memory-mapped files to read large media files. The player reads from the mapped region while the operating system pages data in, which helps keep playback smooth.

Navigating the Implementation Landscape: A Practical Guide to Memory-Mapped Files

The implementation of memory-mapped files varies across programming languages and operating systems. The core steps, however, remain the same:

- Opening the File: The first step is to open the file that will be mapped, using the file-opening function provided by the programming language or operating system.

- Creating the Mapping: Once the file is open, a mapping is created between the file’s contents and a region of the application’s address space. This usually involves specifying the length of the region, the access mode (typically read-only or read-write), and the offset within the file at which the mapping starts.

- Accessing the Mapped Data: After the mapping is established, the application can access the file’s data directly through the mapped region, with no explicit read calls, as if the file were part of its address space.

- Modifying Data: If the mapping is writable and shared, the application can modify the data in the mapped region, and the operating system writes the changes back to the underlying file. To be sure that changes have reached the file at a given point, flush the mapping explicitly.

- Unmapping the File: When the application has finished working with the memory-mapped file, it should unmap it. This releases the address range and the associated resources.
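The steps above can be sketched with Python’s standard `mmap` module. This is one possible shape, not a canonical implementation; the function `uppercase_prefix` and the demo file are hypothetical names chosen for the example.

```python
import mmap
import os
import tempfile


def uppercase_prefix(path: str, count: int) -> None:
    """Upper-case the first `count` bytes of a file in place via a mapping."""
    # Step 1: open the file for reading and writing.
    with open(path, "r+b") as f:
        # Step 2: create the mapping (length 0 means "the whole file").
        mapped = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE)
        try:
            # Step 3: read through the mapping.
            prefix = mapped[:count]
            # Step 4: modify in place; slice assignment cannot change the size.
            mapped[:count] = prefix.upper()
            # Ask the OS to write the modified pages back to the file now.
            mapped.flush()
        finally:
            # Step 5: unmap.
            mapped.close()


# Hypothetical demo file.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"memory map")
    demo_path = tmp.name

uppercase_prefix(demo_path, 6)
with open(demo_path, "rb") as f:
    result = f.read()
print(result)  # b'MEMORY map'
os.remove(demo_path)
```

Reading the file back with an ordinary `read()` confirms that the change made through the mapping reached the file.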

Addressing Common Concerns: A Comprehensive FAQ on Memory-Mapped Files

Q: What are the limitations of memory-mapped files?

A: While memory-mapped files offer significant benefits, they do have certain limitations:

- Memory Constraints: The size of a mapping is limited by the available virtual address space rather than by physical memory. On 32-bit systems this makes it impractical to map files of several gigabytes in one piece. On 64-bit systems much larger files can be mapped, but touching more data than fits in physical memory causes paging, which degrades performance.

- Data Consistency: When multiple processes or threads access the mapped file concurrently, data consistency issues can arise. Proper synchronization mechanisms are needed to ensure data integrity.

- Platform Dependencies: The implementation and behavior of memory-mapped files vary across operating systems and programming languages. Portable code may need adjustments to accommodate platform-specific differences.

Q: How do memory-mapped files compare to traditional file I/O?

A: Memory-mapped files can offer performance advantages over traditional file I/O, particularly for large files that are accessed repeatedly or at random positions. They avoid a system call per access and the extra copy between the operating system’s cache and an application buffer.

However, traditional file I/O gives the program more explicit control. Read and write calls report errors directly, work on pipes and sockets as well as regular files, and can extend a file simply by writing past its end. A mapping has a fixed length and applies only to files that can be mapped, and for a single sequential pass over a file, buffered reads are often just as fast. A mapping does not have to cover the entire file: most platforms let the program map just a region, given an offset and a length.
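As a hedged illustration of mapping only part of a file, the sketch below maps a small region around a requested position. It relies on Python’s `mmap.ALLOCATIONGRANULARITY`, to which the mapping offset must be aligned; the helper `read_window` and the sample file layout are assumptions made for the example.

```python
import mmap
import os
import tempfile


def read_window(path: str, position: int, length: int) -> bytes:
    """Read `length` bytes at `position` by mapping only the needed region."""
    granularity = mmap.ALLOCATIONGRANULARITY
    # The mapping offset must be a multiple of the allocation granularity,
    # so round down and remember how far into the window the data starts.
    aligned_offset = (position // granularity) * granularity
    delta = position - aligned_offset
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), delta + length,
                       access=mmap.ACCESS_READ,
                       offset=aligned_offset) as window:
            return window[delta:delta + length]


# Hypothetical file with a marker placed beyond the second granule.
marker_position = 2 * mmap.ALLOCATIONGRANULARITY + 100
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\x00" * marker_position + b"MARKER" + b"\x00" * 50)
    big_path = tmp.name

found = read_window(big_path, marker_position, 6)
print(found)  # b'MARKER'
os.remove(big_path)
```

The caller is responsible for requesting a range that lies within the file; otherwise `mmap.mmap` raises an error.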

Q: What are the security considerations associated with memory-mapped files?

A: Memory-mapped files raise a few security considerations, particularly with sensitive data. The mapped region is protected like any other memory of the process, so the main exposure comes from the file itself: any process with permission to open the file can map it, and with a shared mapping, changes made by one process are visible to the others. It is therefore essential to restrict file permissions, map files read-only where writing is not needed, and consider encrypting sensitive data.

Q: How can I optimize the performance of memory-mapped files?

A: Several strategies can be employed to optimize the performance of memory-mapped files:

- File Size: Mapping a very large file in one piece consumes address space, and touching all of it can create memory pressure. Consider mapping the file in smaller windows, one region at a time, rather than all at once.

- Access Pattern: Memory mapping works well for random access and for data that is read repeatedly, because pages already in memory are reached without further system calls. For a single sequential pass over a file, traditional buffered I/O is often just as fast, so measure both before committing.

- Synchronization: When multiple processes or threads access the same memory-mapped file concurrently, ensure that proper synchronization mechanisms are in place to prevent data corruption, and keep the synchronized sections short so they do not become a bottleneck.
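One way to limit how much of a large file is mapped at any moment is to walk through it in fixed-size windows. The sketch below counts occurrences of a single byte this way; the function name, the default window size, and the sample file are assumptions for illustration. The window size must be a multiple of `mmap.ALLOCATIONGRANULARITY` so that every window offset is valid.

```python
import mmap
import os
import tempfile


def count_byte_in_windows(path: str, target: bytes,
                          window_size: int = 4 * mmap.ALLOCATIONGRANULARITY) -> int:
    """Count occurrences of the single byte `target`, one window at a time."""
    if len(target) != 1:
        raise ValueError("target must be exactly one byte")
    file_size = os.path.getsize(path)
    total = 0
    with open(path, "rb") as f:
        for offset in range(0, file_size, window_size):
            # The last window may be shorter than window_size.
            length = min(window_size, file_size - offset)
            with mmap.mmap(f.fileno(), length,
                           access=mmap.ACCESS_READ, offset=offset) as window:
                pos = window.find(target)
                while pos != -1:
                    total += 1
                    pos = window.find(target, pos + 1)
    return total


# Hypothetical sample file: 100,000 short lines.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"line\n" * 100_000)
    lines_path = tmp.name

newline_count = count_byte_in_windows(
    lines_path, b"\n", window_size=mmap.ALLOCATIONGRANULARITY)
print(newline_count)  # 100000
os.remove(lines_path)
```

Searching for a single byte avoids the complication of matches that straddle two windows; a multi-byte search would need overlapping windows.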

Guiding Principles for Effective Memory-Mapped File Utilization

- Assess File Size and Access Pattern: Evaluate the size of the file and the access pattern the application requires. Memory-mapped files are most beneficial for large files that are accessed repeatedly or at random positions.

- Consider Memory Constraints: Ensure that the address space can accommodate the mapping and that the part of the file actually touched fits comfortably in physical memory. Otherwise, heavy paging can lead to performance problems.

- Implement Synchronization Mechanisms: If multiple processes or threads access the memory-mapped file concurrently, implement appropriate synchronization mechanisms to maintain data consistency and prevent race conditions.

- Address Security Concerns: Protect sensitive data stored in memory-mapped files from unauthorized access, for example with restrictive file permissions and read-only mappings.
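The synchronization principle can be illustrated with threads sharing one mapping. This sketch uses `threading.Lock`, which only coordinates threads inside a single process; coordinating separate processes would need an operating-system-level mechanism such as file locking, which is not shown here. The counter layout and all names are assumptions made for the example.

```python
import mmap
import os
import struct
import tempfile
import threading

COUNTER = struct.Struct("<Q")  # one unsigned 64-bit counter at offset 0


def increment_many(mapped: mmap.mmap, lock: threading.Lock, times: int) -> None:
    """Increment the counter stored in the mapping `times` times."""
    for _ in range(times):
        # The read-modify-write sequence must not interleave across threads.
        with lock:
            (value,) = COUNTER.unpack_from(mapped, 0)
            COUNTER.pack_into(mapped, 0, value + 1)


# Hypothetical file holding a zero-initialized counter.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(bytes(COUNTER.size))
    counter_path = tmp.name

with open(counter_path, "r+b") as f:
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as mapped:
        lock = threading.Lock()
        workers = [
            threading.Thread(target=increment_many, args=(mapped, lock, 1000))
            for _ in range(4)
        ]
        for worker in workers:
            worker.start()
        for worker in workers:
            worker.join()
        mapped.flush()
        (final_count,) = COUNTER.unpack_from(mapped, 0)

os.remove(counter_path)
print(final_count)  # 4000
```

Without the lock, two threads could read the same value and both write back the same incremented result, losing an update.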

Conclusion: Embracing the Power of Memory-Mapped Files

Memory-mapped files provide a powerful and efficient way to work with large datasets directly within the application’s address space. By avoiding much of the overhead of traditional file I/O, they can improve performance, simplify data management, and enable efficient data sharing. They also have limitations, such as memory constraints, the need for synchronization, and security considerations, but careful planning and appropriate safeguards can mitigate these risks.

By understanding the principles, benefits, and limitations of memory-mapped files, developers can decide where they fit and use them to improve the performance and efficiency of applications that need fast access to large datasets.
